基于python怎么实现单目三维重建

导读:本文共8955字符,通常情况下阅读需要30分钟。同时您也可以点击右侧朗读,来听本文内容。按键盘←(左) →(右) 方向键可以翻页。

摘要: 一、单目三维重建概述客观世界的物体是三维的,而我们用摄像机获取的图像是二维的,但是我们可以通过二维图像感知目标的三维信息。三维重建技术是以一定的方式处理图像进而得到计算机能够识别的三维信息,由此对目标进行分析。而单目三维重建则是根据单个摄像头的运动来模拟双目视觉,从而获得物体在空间中的三维视觉信息,其中,单目即指单个摄像头。二、实现过程在对物体进行单目三维重建的... ...

目录

(为您整理了一些要点),点击可以直达。一、单目三维重建概述

客观世界的物体是三维的,而我们用摄像机获取的图像是二维的,但是我们可以通过二维图像感知目标的三维信息。三维重建技术是以一定的方式处理图像进而得到计算机能够识别的三维信息,由此对目标进行分析。而单目三维重建则是根据单个摄像头的运动来模拟双目视觉,从而获得物体在空间中的三维视觉信息,其中,单目即指单个摄像头。

二、实现过程

在对物体进行单目三维重建的过程中,相关运行环境如下:

matplotlib 3.3.4

numpy 1.19.5

opencv-contrib-python 3.4.2.16

opencv-python 3.4.2.16

pillow 8.2.0

python 3.6.2

其重建主要包含以下步骤:

(1)相机的标定

(2)图像特征提取及匹配

(3)三维重建

接下来,我们来详细看下每个步骤的具体实现:

(1)相机的标定

在我们日常生活中有很多相机,如手机上的相机、数码相机及功能模块型相机等等,每一个相机的参数都是不同的,即相机拍出的照片的分辨率、模式等。假设我们在进行物体三维重建的时候,事先并不知道我们相机的矩阵参数,那么,我们就应当计算出相机的矩阵参数,这一个步骤就叫做相机的标定。相机标定的相关原理我就不介绍了,网上很多人都讲解的挺详细的。其标定的具体实现如下:

defcamera_calibration(ImagePath):#循环中断criteria=(cv2.TERM_CRITERIA_EPS+cv2.TERM_CRITERIA_MAX_ITER,30,0.001)#棋盘格尺寸(棋盘格的交叉点的个数)row=11column=8objpoint=np.zeros((row*column,3),np.float32)objpoint[:,:2]=np.mgrid[0:row,0:column].T.reshape(-1,2)objpoints=[]#3dpointinrealworldspaceimgpoints=[]#2dpointsinimageplane.batch_images=glob.glob(ImagePath+'/*.jpg')fori,fnameinenumerate(batch_images):img=cv2.imread(batch_images[i])imgGray=cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)#findchessboardcornersret,corners=cv2.findChessboardCorners(imgGray,(row,column),None)#iffound,addobjectpoints,imagepoints(afterrefiningthem)ifret:objpoints.append(objpoint)corners2=cv2.cornerSubPix(imgGray,corners,(11,11),(-1,-1),criteria)imgpoints.append(corners2)#Drawanddisplaythecornersimg=cv2.drawChessboardCorners(img,(row,column),corners2,ret)cv2.imwrite('Checkerboard_Image/Temp_JPG/Temp_'+str(i)+'.jpg',img)print("成功提取:",len(batch_images),"张图片角点!")ret,mtx,dist,rvecs,tvecs=cv2.calibrateCamera(objpoints,imgpoints,imgGray.shape[::-1],None,None)其中,cv2.calibrateCamera函数求出的mtx矩阵即为K矩阵。

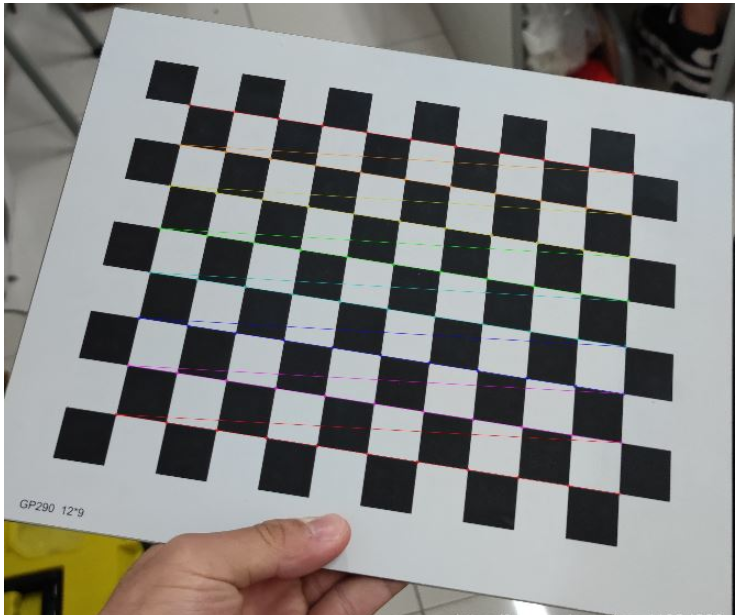

当修改好相应参数并完成标定后,我们可以输出棋盘格的角点图片来看看是否已成功提取棋盘格的角点,输出角点图如下:

图1:棋盘格角点提取

(2)图像特征提取及匹配

在整个三维重建的过程中,这一步是最为关键的,也是最为复杂的一步,图片特征提取的好坏决定了你最后的重建效果。

在图片特征点提取算法中,有三种算法较为常用,分别为:SIFT算法、SURF算法以及ORB算法。通过综合分析对比,我们在这一步中采取SURF算法来对图片的特征点进行提取。三种算法的特征点提取效果对比如果大家感兴趣可以去网上搜来看下,在此就不逐一对比了。具体实现如下:

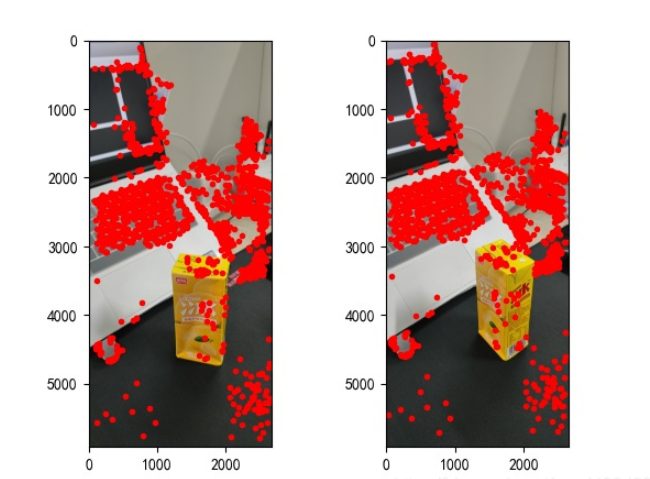

defepipolar_geometric(Images_Path,K):IMG=glob.glob(Images_Path)img1,img2=cv2.imread(IMG[0]),cv2.imread(IMG[1])img1_gray=cv2.cvtColor(img1,cv2.COLOR_BGR2GRAY)img2_gray=cv2.cvtColor(img2,cv2.COLOR_BGR2GRAY)#InitiateSURFdetectorSURF=cv2.xfeatures2d_SURF.create()#computekeypoint&descriptionskeypoint1,descriptor1=SURF.detectAndCompute(img1_gray,None)keypoint2,descriptor2=SURF.detectAndCompute(img2_gray,None)print("角点数量:",len(keypoint1),len(keypoint2))#Findpointmatchesbf=cv2.BFMatcher(cv2.NORM_L2,crossCheck=True)matches=bf.match(descriptor1,descriptor2)print("匹配点数量:",len(matches))src_pts=np.asarray([keypoint1[m.queryIdx].ptforminmatches])dst_pts=np.asarray([keypoint2[m.trainIdx].ptforminmatches])#plotknn_image=cv2.drawMatches(img1_gray,keypoint1,img2_gray,keypoint2,matches[:-1],None,flags=2)image_=Image.fromarray(np.uint8(knn_image))image_.save("MatchesImage.jpg")#Constrainmatchestofithomographyretval,mask=cv2.findHomography(src_pts,dst_pts,cv2.RANSAC,100.0)#Weselectonlyinlierpointspoints1=src_pts[mask.ravel()==1]points2=dst_pts[mask.ravel()==1]找到的特征点如下:

图2:特征点提取

(3)三维重建

我们找到图片的特征点并相互匹配后,则可以开始进行三维重建了,具体实现如下:

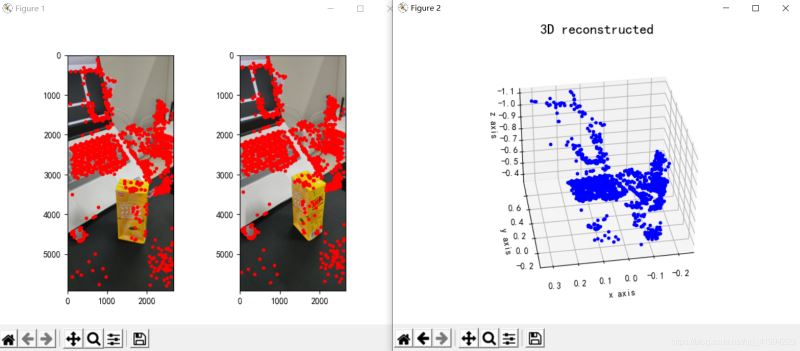

points1=cart2hom(points1.T)points2=cart2hom(points2.T)#plotfig,ax=plt.subplots(1,2)ax[0].autoscale_view('tight')ax[0].imshow(cv2.cvtColor(img1,cv2.COLOR_BGR2RGB))ax[0].plot(points1[0],points1[1],'r.')ax[1].autoscale_view('tight')ax[1].imshow(cv2.cvtColor(img2,cv2.COLOR_BGR2RGB))ax[1].plot(points2[0],points2[1],'r.')plt.savefig('MatchesPoints.jpg')fig.show()#points1n=np.dot(np.linalg.inv(K),points1)points2n=np.dot(np.linalg.inv(K),points2)E=compute_essential_normalized(points1n,points2n)print('Computedessentialmatrix:',(-E/E[0][1]))P1=np.array([[1,0,0,0],[0,1,0,0],[0,0,1,0]])P2s=compute_P_from_essential(E)ind=-1fori,P2inenumerate(P2s):#Findthecorrectcameraparametersd1=reconstruct_one_point(points1n[:,0],points2n[:,0],P1,P2)#ConvertP2fromcameraviewtoworldviewP2_homogenous=np.linalg.inv(np.vstack([P2,[0,0,0,1]]))d2=np.dot(P2_homogenous[:3,:4],d1)ifd1[2]>0andd2[2]>0:ind=iP2=np.linalg.inv(np.vstack([P2s[ind],[0,0,0,1]]))[:3,:4]Points3D=linear_triangulation(points1n,points2n,P1,P2)fig=plt.figure()fig.suptitle('3Dreconstructed',fontsize=16)ax=fig.gca(projection='3d')ax.plot(Points3D[0],Points3D[1],Points3D[2],'b.')ax.set_xlabel('xaxis')ax.set_ylabel('yaxis')ax.set_zlabel('zaxis')ax.view_init(elev=135,azim=90)plt.savefig('Reconstruction.jpg')plt.show()其重建效果如下(效果一般):

图3:三维重建

三、结论

从重建的结果来看,单目三维重建效果一般,我认为可能与这几方面因素有关:

(1)图片拍摄形式。如果是进行单目三维重建任务,在拍摄图片时最好保持平行移动相机,且最好正面拍摄,即不要斜着拍或特异角度进行拍摄;

(2)拍摄时周边环境干扰。选取拍摄的地点最好保持单一,减少无关物体的干扰;

(3)拍摄光源问题。选取的拍照场地要保证合适的亮度(具体情况要试才知道你们的光源是否达标),还有就是移动相机的时候也要保证前一时刻和此时刻的光源一致性。

其实,单目三维重建的效果确实一般,就算将各方面情况都拉满,可能得到的重建效果也不是特别好。或者我们可以考虑采用双目三维重建,双目三维重建效果肯定是要比单目的效果好的,在实现是也就麻烦一(亿)点点,哈哈。其实也没有多太多的操作,主要就是整两个相机拍摄和标定两个相机麻烦点,其他的都还好。

四、代码

importcv2importjsonimportnumpyasnpimportglobfromPILimportImageimportmatplotlib.pyplotaspltplt.rcParams['font.sans-serif']=['SimHei']plt.rcParams['axes.unicode_minus']=Falsedefcart2hom(arr):"""Convertcatesiantohomogenouspointsbyappendingarowof1s:paramarr:arrayofshape(num_dimensionxnum_points):returns:arrayofshape((num_dimension+1)xnum_points)"""ifarr.ndim==1:returnnp.hstack([arr,1])returnnp.asarray(np.vstack([arr,np.ones(arr.shape[1])]))defcompute_P_from_essential(E):"""Computethesecondcameramatrix(assumingP1=[I0])fromanessentialmatrix.E=[t]R:returns:listof4possiblecameramatrices."""U,S,V=np.linalg.svd(E)#Ensurerotationmatrixareright-handedwithpositivedeterminantifnp.linalg.det(np.dot(U,V))<0:V=-V#create4possiblecameramatrices(Hartleyp258)W=np.array([[0,-1,0],[1,0,0],[0,0,1]])P2s=[np.vstack((np.dot(U,np.dot(W,V)).T,U[:,2])).T,np.vstack((np.dot(U,np.dot(W,V)).T,-U[:,2])).T,np.vstack((np.dot(U,np.dot(W.T,V)).T,U[:,2])).T,np.vstack((np.dot(U,np.dot(W.T,V)).T,-U[:,2])).T]returnP2sdefcorrespondence_matrix(p1,p2):p1x,p1y=p1[:2]p2x,p2y=p2[:2]returnnp.array([p1x*p2x,p1x*p2y,p1x,p1y*p2x,p1y*p2y,p1y,p2x,p2y,np.ones(len(p1x))]).Treturnnp.array([p2x*p1x,p2x*p1y,p2x,p2y*p1x,p2y*p1y,p2y,p1x,p1y,np.ones(len(p1x))]).Tdefscale_and_translate_points(points):"""Scaleandtranslateimagepointssothatcentroidofthepointsareattheoriginandavgdistancetotheoriginisequaltosqrt(2).:parampoints:arrayofhomogenouspoint(3xn):returns:arrayofsameinputshapeanditsnormalizationmatrix"""x=points[0]y=points[1]center=points.mean(axis=1)#meanofeachrowcx=x-center[0]#centerthepointscy=y-center[1]dist=np.sqrt(np.power(cx,2)+np.power(cy,2))scale=np.sqrt(2)/dist.mean()norm3d=np.array([[scale,0,-scale*center[0]],[0,scale,-scale*center[1]],[0,0,1]])returnnp.dot(norm3d,points),norm3ddefcompute_image_to_image_matrix(x1,x2,compute_essential=False):"""Computethefundamentaloressentialmatrixfromcorrespondingpoints(x1,x23*narrays)usingthe8pointalgorithm.EachrowintheAmatrixbelowisconstructedas[x'*x,x'*y,x',y'*x,y'*y,y',x,y,1]"""A=correspondence_matrix(x1,x2)#computelinearleastsquaresolutionU,S,V=np.linalg.svd(A)F=V[-1].reshape(3,3)#constrainF.Makerank2byzeroingoutlastsingularvalueU,S,V=np.linalg.svd(F)S[-1]=0ifcompute_essential:S=[1,1,0]#Forcerank2andequaleigenvaluesF=np.dot(U,np.dot(np.diag(S),V))returnFdefcompute_normalized_image_to_image_matrix(p1,p2,compute_essential=False):"""Computesthefundamentaloressentialmatrixfromcorrespondingpointsusingthenormalized8pointalgorithm.:inputp1,p2:correspondingpointswithshape3xn:returns:fundamentaloressentialmatrixwithshape3x3"""n=p1.shape[1]ifp2.shape[1]!=n:raiseValueError('Numberofpointsdonotmatch.')#preprocessimagecoordinatesp1n,T1=scale_and_translate_points(p1)p2n,T2=scale_and_translate_points(p2)#computeForEwiththecoordinatesF=compute_image_to_image_matrix(p1n,p2n,compute_essential)#reversepreprocessingofcoordinates#WeknowthatP1'EP2=0F=np.dot(T1.T,np.dot(F,T2))returnF/F[2,2]defcompute_fundamental_normalized(p1,p2):returncompute_normalized_image_to_image_matrix(p1,p2)defcompute_essential_normalized(p1,p2):returncompute_normalized_image_to_image_matrix(p1,p2,compute_essential=True)defskew(x):"""Createaskewsymmetricmatrix*A*froma3dvector*x*.Property:np.cross(A,v)==np.dot(x,v):paramx:3dvector:returns:3x3skewsymmetricmatrixfrom*x*"""returnnp.array([[0,-x[2],x[1]],[x[2],0,-x[0]],[-x[1],x[0],0]])defreconstruct_one_point(pt1,pt2,m1,m2):"""pt1andm1*Xareparallelandcrossproduct=0pt1xm1*X=pt2xm2*X=0"""A=np.vstack([np.dot(skew(pt1),m1),np.dot(skew(pt2),m2)])U,S,V=np.linalg.svd(A)P=np.ravel(V[-1,:4])returnP/P[3]deflinear_triangulation(p1,p2,m1,m2):"""Lineartriangulation(Hartleych12.2pg312)tofindthe3DpointXwherep1=m1*Xandp2=m2*X.SolveAX=0.:paramp1,p2:2Dpointsinhomo.orcatesiancoordinates.Shape(3xn):paramm1,m2:Cameramatricesassociatedwithp1andp2.Shape(3x4):returns:4xnhomogenous3dtriangulatedpoints"""num_points=p1.shape[1]res=np.ones((4,num_points))foriinrange(num_points):A=np.asarray([(p1[0,i]*m1[2,:]-m1[0,:]),(p1[1,i]*m1[2,:]-m1[1,:]),(p2[0,i]*m2[2,:]-m2[0,:]),(p2[1,i]*m2[2,:]-m2[1,:])])_,_,V=np.linalg.svd(A)X=V[-1,:4]res[:,i]=X/X[3]returnresdefwritetofile(dict,path):forindex,iteminenumerate(dict):dict[item]=np.array(dict[item])dict[item]=dict[item].tolist()js=json.dumps(dict)withopen(path,'w')asf:f.write(js)print("参数已成功保存到文件")defreadfromfile(path):withopen(path,'r')asf:js=f.read()mydict=json.loads(js)print("参数读取成功")returnmydictdefcamera_calibration(SaveParamPath,ImagePath):#循环中断criteria=(cv2.TERM_CRITERIA_EPS+cv2.TERM_CRITERIA_MAX_ITER,30,0.001)#棋盘格尺寸row=11column=8objpoint=np.zeros((row*column,3),np.float32)objpoint[:,:2]=np.mgrid[0:row,0:column].T.reshape(-1,2)objpoints=[]#3dpointinrealworldspaceimgpoints=[]#2dpointsinimageplane.batch_images=glob.glob(ImagePath+'/*.jpg')fori,fnameinenumerate(batch_images):img=cv2.imread(batch_images[i])imgGray=cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)#findchessboardcornersret,corners=cv2.findChessboardCorners(imgGray,(row,column),None)#iffound,addobjectpoints,imagepoints(afterrefiningthem)ifret:objpoints.append(objpoint)corners2=cv2.cornerSubPix(imgGray,corners,(11,11),(-1,-1),criteria)imgpoints.append(corners2)#Drawanddisplaythecornersimg=cv2.drawChessboardCorners(img,(row,column),corners2,ret)cv2.imwrite('Checkerboard_Image/Temp_JPG/Temp_'+str(i)+'.jpg',img)print("成功提取:",len(batch_images),"张图片角点!")ret,mtx,dist,rvecs,tvecs=cv2.calibrateCamera(objpoints,imgpoints,imgGray.shape[::-1],None,None)dict={'ret':ret,'mtx':mtx,'dist':dist,'rvecs':rvecs,'tvecs':tvecs}writetofile(dict,SaveParamPath)meanError=0foriinrange(len(objpoints)):imgpoints2,_=cv2.projectPoints(objpoints[i],rvecs[i],tvecs[i],mtx,dist)error=cv2.norm(imgpoints[i],imgpoints2,cv2.NORM_L2)/len(imgpoints2)meanError+=errorprint("totalerror:",meanError/len(objpoints))defepipolar_geometric(Images_Path,K):IMG=glob.glob(Images_Path)img1,img2=cv2.imread(IMG[0]),cv2.imread(IMG[1])img1_gray=cv2.cvtColor(img1,cv2.COLOR_BGR2GRAY)img2_gray=cv2.cvtColor(img2,cv2.COLOR_BGR2GRAY)#InitiateSURFdetectorSURF=cv2.xfeatures2d_SURF.create()#computekeypoint&descriptionskeypoint1,descriptor1=SURF.detectAndCompute(img1_gray,None)keypoint2,descriptor2=SURF.detectAndCompute(img2_gray,None)print("角点数量:",len(keypoint1),len(keypoint2))#Findpointmatchesbf=cv2.BFMatcher(cv2.NORM_L2,crossCheck=True)matches=bf.match(descriptor1,descriptor2)print("匹配点数量:",len(matches))src_pts=np.asarray([keypoint1[m.queryIdx].ptforminmatches])dst_pts=np.asarray([keypoint2[m.trainIdx].ptforminmatches])#plotknn_image=cv2.drawMatches(img1_gray,keypoint1,img2_gray,keypoint2,matches[:-1],None,flags=2)image_=Image.fromarray(np.uint8(knn_image))image_.save("MatchesImage.jpg")#Constrainmatchestofithomographyretval,mask=cv2.findHomography(src_pts,dst_pts,cv2.RANSAC,100.0)#Weselectonlyinlierpointspoints1=src_pts[mask.ravel()==1]points2=dst_pts[mask.ravel()==1]points1=cart2hom(points1.T)points2=cart2hom(points2.T)#plotfig,ax=plt.subplots(1,2)ax[0].autoscale_view('tight')ax[0].imshow(cv2.cvtColor(img1,cv2.COLOR_BGR2RGB))ax[0].plot(points1[0],points1[1],'r.')ax[1].autoscale_view('tight')ax[1].imshow(cv2.cvtColor(img2,cv2.COLOR_BGR2RGB))ax[1].plot(points2[0],points2[1],'r.')plt.savefig('MatchesPoints.jpg')fig.show()#points1n=np.dot(np.linalg.inv(K),points1)points2n=np.dot(np.linalg.inv(K),points2)E=compute_essential_normalized(points1n,points2n)print('Computedessentialmatrix:',(-E/E[0][1]))P1=np.array([[1,0,0,0],[0,1,0,0],[0,0,1,0]])P2s=compute_P_from_essential(E)ind=-1fori,P2inenumerate(P2s):#Findthecorrectcameraparametersd1=reconstruct_one_point(points1n[:,0],points2n[:,0],P1,P2)#ConvertP2fromcameraviewtoworldviewP2_homogenous=np.linalg.inv(np.vstack([P2,[0,0,0,1]]))d2=np.dot(P2_homogenous[:3,:4],d1)ifd1[2]>0andd2[2]>0:ind=iP2=np.linalg.inv(np.vstack([P2s[ind],[0,0,0,1]]))[:3,:4]Points3D=linear_triangulation(points1n,points2n,P1,P2)returnPoints3Ddefmain():CameraParam_Path='CameraParam.txt'CheckerboardImage_Path='Checkerboard_Image'Images_Path='SubstitutionCalibration_Image/*.jpg'#计算相机参数camera_calibration(CameraParam_Path,CheckerboardImage_Path)#读取相机参数config=readfromfile(CameraParam_Path)K=np.array(config['mtx'])#计算3D点Points3D=epipolar_geometric(Images_Path,K)#重建3D点fig=plt.figure()fig.suptitle('3Dreconstructed',fontsize=16)ax=fig.gca(projection='3d')ax.plot(Points3D[0],Points3D[1],Points3D[2],'b.')ax.set_xlabel('xaxis')ax.set_ylabel('yaxis')ax.set_zlabel('zaxis')ax.view_init(elev=135,azim=90)plt.savefig('Reconstruction.jpg')plt.show()if__name__=='__main__':main() </div> <div class="zixun-tj-product adv-bottom"></div> </div> </div> <div class="prve-next-news">基于python怎么实现单目三维重建的详细内容,希望对您有所帮助,信息来源于网络。